I’m at the twenty-fifth Security Protocols Workshop, of which the theme is protocols with multiple objectives. I’ll try to liveblog the talks in followups to this post.


The University is Hiring

We’re looking for a Chief Information Security Officer. This isn’t a research post here at the lab, but across the yard in University Information Services, where they manage our networks and our administrative systems. There will be opportunities to work with security researchers like us, but the main task is protecting Cambridge from all sorts of online bad actors. If you would like to be in the thick of it, and you know what you’re doing, here’s how you can apply.

DigiTally

Last week I gave a keynote talk at CCS about DigiTally, a project we’ve been working on to extend mobile payments to areas where the network is intermittent, congested or non-existent.

The Bill and Melinda Gates Foundation called for ways to increase the use of mobile payments, which have been transformative in many less developed countries. We did some research and found that network availability and cost were the two main problems. So how could we do phone payments where there’s no network, with a marginal cost of zero? If people had smartphones you could use some combination of NFC, Bluetooth and local wifi, but most of the rural poor in Africa and Asia use simple phones without any extra communications modalities, other than those which the users themselves can provide. So how could you enable people to do phone payments by simple user actions? We were inspired by the prepayment electricity meters I helped develop some twenty years ago; meters conforming to this spec are now used in over 100 countries.

We got a small grant from the Gates Foundation to do a prototype and field trial. We designed a system, DigiTally, where Alice can pay Bob by exchanging eight-digit MACs that are generated, and verified, by the SIM cards in their phones. For rapid prototyping we used overlay SIMs (which are already being used in a different phone payment system in Africa). The cryptography is described in a paper we gave at the Security Protocols Workshop this spring.
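To give a feel for what an eight-digit MAC looks like, here is a minimal sketch in Python. It is not the DigiTally protocol itself, which is described in the paper; the choice of HMAC-SHA256, the key, the message layout and the function names are all assumptions made for illustration.

```python
import hashlib
import hmac


def eight_digit_mac(key: bytes, message: bytes) -> str:
    """Return a MAC over `message`, truncated to eight decimal digits.

    Assumption: HMAC-SHA256 as the underlying MAC. The real system runs
    on SIM cards and may well use a different primitive.
    """
    digest = hmac.new(key, message, hashlib.sha256).digest()
    # Interpret the first 8 bytes as an integer and keep 8 decimal digits.
    value = int.from_bytes(digest[:8], "big") % 10**8
    return f"{value:08d}"


def verify_code(key: bytes, message: bytes, code: str) -> bool:
    """Recompute the code and compare it in constant time."""
    return hmac.compare_digest(eight_digit_mac(key, message), code)


# Hypothetical shared key and transaction fields, for illustration only.
demo_key = b"hypothetical-shared-key"
demo_message = b"payer=alice;payee=bob;amount=250;counter=17"
demo_code = eight_digit_mac(demo_key, demo_message)
```

A code this short can be read out or typed by hand, which is the point; the price is that a guess succeeds with probability one in a hundred million per attempt, so a real design has to limit the number of attempts.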

Last month we took the prototype to Strathmore University in Nairobi to do a field trial involving usability studies in their bookshop, coffee shop and cafeteria. The results were very encouraging and I described them in my talk at CCS (slides). There will be a paper on this study in due course. We’re now looking for partners to do deployment at scale, whether in phone payments or in other apps that need to support value transfer in delay-tolerant networks.

There has been press coverage in the New Scientist, Engadget and Impress (original Japanese version).

Hacking the iPhone PIN retry counter

At our security group meeting on the 19th August, Sergei Skorobogatov demonstrated a NAND backup attack on an iPhone 5c. I typed in six wrong PINs and it locked; he removed the flash chip (which he’d desoldered and led out to a socket); he erased and restored the changed pages; he put it back in the phone; and I was able to enter a further six wrong PINs.
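The logic of the attack can be shown with a toy model in Python. This is only a sketch: the class, its field names and the single counter are hypothetical, and a real phone stores its state quite differently and adds escalating delays. It shows just the one idea that matters, which is that a retry counter kept in storage the attacker can copy and restore can be rewound.

```python
import copy


class ToyPhone:
    """Toy device whose failed-attempt counter lives in copyable 'flash'."""

    MAX_ATTEMPTS = 6  # the demo phone locked after six wrong PINs

    def __init__(self, pin: str):
        self._pin = pin
        self.flash = {"failed_attempts": 0}

    def try_pin(self, guess: str) -> str:
        if self.flash["failed_attempts"] >= self.MAX_ATTEMPTS:
            return "locked"
        if guess == self._pin:
            self.flash["failed_attempts"] = 0
            return "unlocked"
        self.flash["failed_attempts"] += 1
        return "wrong"


phone = ToyPhone(pin="4821")
backup = copy.deepcopy(phone.flash)            # image the flash first

first_round = [phone.try_pin(f"{g:04d}") for g in range(6)]
after_lock = phone.try_pin("0006")             # counter exhausted

phone.flash = copy.deepcopy(backup)            # restore the saved pages
second_round = [phone.try_pin(f"{g:04d}") for g in range(6, 12)]
```

After the restore, the toy phone accepts six more guesses, just as the real one did in the demonstration.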

Sergei has today released a paper describing the attack.

During the recent fight between the FBI and Apple, FBI Director Jim Comey said this kind of attack wouldn’t work.

USENIX Security Best Paper 2016 – The Million Key Question … Origins of RSA Public Keys

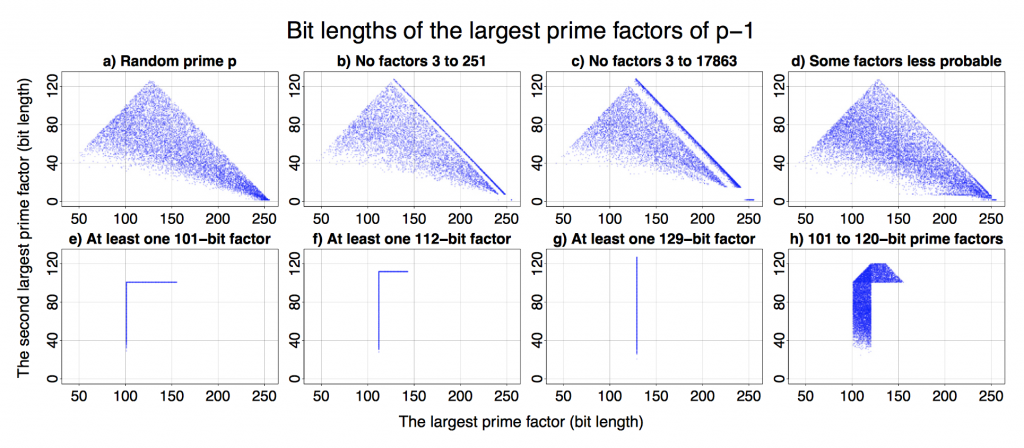

Petr Svenda et al from Masaryk University in Brno won the Best Paper Award at this year’s USENIX Security Symposium with their paper classifying public RSA keys according to their source.

I really like the simplicity of the original assumption. The starting point of the research was that different crypto/RSA libraries use slightly different elimination methods and “cut-off” thresholds to find suitable prime numbers. They thought these differences should be sufficient to detect a particular cryptographic implementation and all that was needed were public keys. Petr et al confirmed this assumption. The best paper award is a well-deserved recognition as I’ve worked with and followed Petr’s activities closely.

The authors created a method for efficient identification of the source (software library or hardware device) of RSA public keys. It resulted in a classification of keys into more than a dozen categories. This classification can be used as a fingerprint that decreases the anonymity of users of Tor and other privacy enhancing mailers or operators.
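To make the idea concrete, here is a small Python sketch of the kind of feature one can read off a public modulus alone. The particular features (the top byte and the residues mod 3 and mod 4) and the function name are illustrative assumptions; the paper defines the exact features and the classifier built on them.

```python
def modulus_features(n: int) -> dict:
    """Extract a few simple, implementation-sensitive features of an RSA modulus.

    Libraries that pick primes differently (for example by forcing the top
    bits of each prime, or by rejecting candidates with certain residues)
    leave a statistical bias in values like these.
    """
    bits = n.bit_length()
    # Most significant 8 bits of the modulus (the whole value if shorter).
    top_byte = n >> (bits - 8) if bits >= 8 else n
    return {
        "bits": bits,
        "top_byte": top_byte,
        "mod3": n % 3,
        "mod4": n % 4,
    }


# Toy value only; a real modulus would be 1024 bits or more.
example = modulus_features(0xF123)
```

No single key gives much away. The signal comes from comparing the distribution of such features over many keys with the distributions measured for known libraries and cards.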

All that is the result of an analysis of over 60 million freshly generated keys from 22 open- and closed-source libraries and from 16 different smart-cards. While the findings are fairly theoretical, they are demonstrated with a series of easy-to-understand graphs (see above).

I can’t see an easy way to exploit the results for immediate cyber attacks. However, we started looking into practical applications. There are interesting opportunities for enterprise compliance audits, as the classification only requires access to datasets of public keys – often created as a by-product of internal network vulnerability scanning.

An extended version of the paper is available from http://crcs.cz/rsa.

Yet another Android side channel: input stealing for fun and profit

At PETS 2016 we presented a new side-channel attack in our paper Don’t Interrupt Me While I Type: Inferring Text Entered Through Gesture Typing on Android Keyboards. This was part of Laurent Simon’s thesis, and won him the runner-up to the best student paper award.

We found that software on your smartphone can infer words you type in other apps by monitoring the aggregate number of context switches and the number of hardware interrupts. These are readable by permissionless apps within the virtual procfs filesystem (mounted under /proc). Three previous research groups had found that other files under procfs support side channels. But the files they used contained information about individual apps – e.g. the file /proc/uid_stat/victimapp/tcp_snd contains the number of bytes sent by “victimapp”. These files are no longer readable in the latest Android version.

We found that the “global” files – those that contain aggregate information about the system – also leak. So a curious app can monitor these global files as a user types on the phone and try to work out the words. We looked at smartphone keyboards that support “gesture typing”: a novel input mechanism democratized by SwiftKey, whereby a user drags their finger from letter to letter to enter words.
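As a rough sketch of what such monitoring involves, the Python below parses the system-wide counters and turns successive samples into per-interval deltas. It assumes the counters are taken from /proc/stat, whose `ctxt` and `intr` lines hold the total context switches and interrupts on Linux; the post does not name the exact files, and the function names are hypothetical. Turning the deltas into words needs the models described in the paper and is not attempted here.

```python
from typing import List, Tuple


def parse_proc_stat(text: str) -> Tuple[int, int]:
    """Return (context_switches, interrupts) from the text of /proc/stat."""
    ctxt = intr = None
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "ctxt":
            ctxt = int(fields[1])
        elif fields[0] == "intr":
            # The first number on the intr line is the total of all interrupts.
            intr = int(fields[1])
    if ctxt is None or intr is None:
        raise ValueError("ctxt or intr line not found")
    return ctxt, intr


def sample(path: str = "/proc/stat") -> Tuple[int, int]:
    """Read the global counters once (only works where the file is readable)."""
    with open(path) as f:
        return parse_proc_stat(f.read())


def deltas(samples: List[Tuple[int, int]]) -> List[Tuple[int, int]]:
    """Differences between consecutive samples: activity per interval."""
    return [(b[0] - a[0], b[1] - a[1]) for a, b in zip(samples, samples[1:])]
```

An observer would call `sample()` in a tight loop while the user types and look for bursts in the deltas that line up with finger movement.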

This work shows once again how difficult it is to prevent side channels: they come up in all sorts of interesting and unexpected ways. Fortunately, we think there is an easy fix: Google should simply disable access to all procfs files, rather than just the files that leak information about individual apps. Meanwhile, if you’re developing apps for privacy or anonymity, you should be aware that these risks exist.

PETS 2016

I am at the Privacy Enhancing Technologies Symposium (PETS 2016) in Darmstadt until Friday, and will try to liveblog some of the sessions in followups to this post. (I can’t do them all as there are some parallel sessions.)

Royal Society report on cybersecurity research

The Royal Society has just published a report on cybersecurity research. I was a member of the steering group that tried to keep the policy team headed in the right direction. Its recommendation that governments preserve the robustness of encryption is welcome enough, given the new Russian law on access to crypto keys; it was nice to get, given the conservative nature of the Society. But I’m afraid the glass is only half full.

I was disappointed that the final report went along with the GCHQ line that security breaches should not be reported to affected data subjects, as in the USA, but to the agencies, as mandated in the EU’s NIS directive. Its call for an independent review of the UK’s cybersecurity needs may also achieve little. I was on John Beddington’s Blackett Review five years ago, and the outcome wasn’t published; it was mostly used to justify a budget increase for GCHQ. Its call for UK government work on standards is irrelevant post-Brexit; indeed standards made in Europe will probably be better without UK interference. Most of all, I cannot accept the report’s line that the government should help direct cybersecurity research. Most scientists agree that too much money already goes into directed programmes and not enough into responsive-mode and curiosity-driven research. In the case of security research there is a further factor: the stark conflict of interest between bona fide researchers, whose aim is that some of the people should enjoy some security and privacy some of the time, and agencies engaged in programmes such as Operation Bullrun whose goal is that this should not happen. GCHQ may want a “more responsive cybersecurity agenda”; but that’s the last thing people like me want them to have.

The report has in any case been overtaken by events. First, Brexit is already doing serious harm to research funding. Second, Brexit is also doing serious harm to the IT industry; we hear daily of listings postponed, investments reconsidered and firms planning to move development teams and data overseas. Third, the Investigatory Powers bill currently before the House of Lords highlights the fact that the surveillance debate in the West these days is more about access to data at rest and about whether the government can order firms to hack their customers.

While all three arms of the US government have drawn back on surveillance powers following the Snowden revelations, Theresa May has taken the hardest possible line. Her Investigatory Powers Bill will give her successors as Home Secretary sweeping powers to order firms in the UK to hand over data and help GCHQ hack their customers. Brexit will shield these powers from challenge in the European Court of Justice, making it much harder for a UK company to claim “adequacy” for its data protection arrangements in respect of EU data subjects. This will make it still less attractive for an IT company to keep in the UK either data that could be seized or engineering staff who could be coerced. I am seriously concerned that, together with Brexit, this will be the double whammy that persuades overseas firms not to invest in the UK, and that even causes some UK firms to leave. In the face of this massive self-harm, the measures suggested by the report are unlikely to help much.

Inter-ACE cyberchallenge at Cambridge

The best student hackers from the UK’s 13 Academic Centres of Excellence in Cyber Security Research are coming to Cambridge for the first Inter-ACE Cyberchallenge tomorrow, Saturday 23 April 2016.

Security Protocols 2016

I’m at the 24th Security Protocols Workshop in Brno (no, not Borneo, as a friend misheard it, but in the Czech Republic; a two-hour flight rather than a twenty-hour one). We ended up being bumped to an old chapel in the Mendel museum, a former monastery where the monk Gregor Mendel figured out genetics from the study of peas, for the prosaic reason that the Canadian ambassador pre-empted our meeting room. As a result we had no wifi and I have had to liveblog from the pub, where we are having lunch. The session liveblogs will be in followups to this post, in the usual style.