I’ll be liveblogging the Workshop on Security and Human behaviour, which Alice Hutchings and I are having to run online once more this year. My liveblogs will appear as followups to this post. Edited to add: Here are the videos for sessions 1, 2, 3, 4, 5 and 6.


Robots, manners and stress

Humans and other animals have evolved to be aware of whether we’re under threat. When we’re on safe territory with family and friends we relax, but when we sense that a rival or a predator might be nearby, our fight-or-flight response kicks in. Situational awareness is vital, as it’s just too stressful to be alert all the time.

We’ve started to realise that this is likely to be just as important in many machine-learning applications. Take as an example machine vision in an automatic driver assistance system, whose goal is automatic lane keeping and automatic emergency braking. Such systems use deep neural networks, as they perform way better than the alternatives; but they can be easily fooled by adversarial examples. Should we worry? Sure, a bad person might cause a car crash by projecting a misleading image on a motorway bridge – but they could as easily steal some traffic cones from the road works. Nobody sits up at night worrying about that. But the car industry does actually detune vision systems from fear of deceptive attacks!

We therefore started a thread of research aimed at helping machine-learning systems detect whether they’re under attack. Our first idea was the Taboo Trap. You raise your kids to observe social taboos – to behave well and speak properly – and yet once you send them to school they suddenly know words that would make your granny blush. The taboo violation shows they’ve been exposed to ‘adversarial inputs’, as an ML engineer would call them. So we worked out how to train a neural network to avoid certain taboo values, both of outputs (forbidden utterances) and intermediate activations (forbidden thoughts). The taboos can be changed every time you retrain the network, giving the equivalent of a cryptographic key. Thus even though adversarial samples will always exist, you can make them harder to find; an attacker can’t just find one that works against one model of car and use it against every other model. You can take a view, based on risk, of how many different keys you need.
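The run-time side of this idea can be sketched in a few lines. This is a minimal illustration rather than the implementation from our paper: the function names, the choice of one activation ceiling per layer, and the way the ceilings are derived from a numeric key are all assumptions made for the example.

```python
import numpy as np

def taboo_thresholds(key: int, n_layers: int, low: float = 2.0, high: float = 4.0) -> np.ndarray:
    """Derive one hypothetical activation ceiling per layer from a secret key."""
    rng = np.random.default_rng(key)
    return rng.uniform(low, high, size=n_layers)

def taboo_penalty(activations, thresholds) -> float:
    """Training-time regulariser: total amount by which activations exceed their ceilings."""
    return float(sum(np.maximum(a - t, 0.0).sum() for a, t in zip(activations, thresholds)))

def violates_taboo(activations, thresholds) -> bool:
    """Run-time alarm: True if any activation strays above its layer's ceiling."""
    return any(bool((a > t).any()) for a, t in zip(activations, thresholds))

# Illustrative activations for a two-layer model (made-up numbers).
thresholds = taboo_thresholds(key=1234, n_layers=2)
benign = [np.array([0.1, 0.8, 1.2]), np.array([0.0, 0.5])]
suspicious = [np.array([0.1, 0.8, 1.2]), np.array([0.0, 9.0])]

print(violates_taboo(benign, thresholds))      # stays inside the taboo limits
print(violates_taboo(suspicious, thresholds))  # trips the alarm
```

During training, a term like `taboo_penalty` would be added to the loss so that benign inputs rarely cross the ceilings; retraining with a different key moves the ceilings.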

We then showed how you can also attack the availability of neural networks using sponge examples – inputs designed to soak up as much energy, and waste as much time, as possible. An alarm can be simpler to build in this case: just monitor how long your classifier takes to run.
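A timing alarm of this kind might look like the sketch below. The toy classifier, the wrapper name and the 50 ms budget are hypothetical; a real deployment would calibrate the budget from measurements on benign inputs, and might monitor energy as well as time.

```python
import time

def classify_with_alarm(classify, x, budget_s):
    """Run classify(x) and report whether it overran its time budget."""
    start = time.perf_counter()
    label = classify(x)
    elapsed = time.perf_counter() - start
    return label, elapsed, elapsed > budget_s

def toy_classifier(x):
    """Stand-in model whose running time grows with input 'difficulty'."""
    time.sleep(0.001 * x)  # a sponge example would try to maximise this cost
    return x % 2

_, _, normal_alarm = classify_with_alarm(toy_classifier, 1, budget_s=0.05)
_, _, sponge_alarm = classify_with_alarm(toy_classifier, 200, budget_s=0.05)
print(normal_alarm, sponge_alarm)
```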

Are there broader lessons? We suspect so. As robots develop situational awareness, like humans, and react to real or potential attacks by falling back to a more cautious mode of operation, a hostile environment will cause the equivalent of stress. Sometimes this will be deliberate; one can imagine constant low-level engagement between drones at tense national borders, just as countries currently probe each others’ air defences. But much of the time it may well be a by-product of poor automation design coupled with companies hustling aggressively for consumers’ attention.

This suggests a missing factor in machine-learning research: manners. We’ve evolved manners to signal to others that our intent is not hostile, and to negotiate the many little transactions that in a hostile environment might lead to a tussle for dominance. Yet these are hard for robots. Food-delivery robots can become unpopular for obstructing and harassing other pavement users; and one of the show-stoppers for automated driving is the difficulty that self-driving cars have in crossing traffic, or otherwise negotiating precedence with other road users. And even in the military, manners have a role – from the chivalry codes of medieval knights to the more modern protocols whereby warships and warplanes warn other craft before opening fire. If we let loose swarms of killer drones with no manners, conflict will be more likely.

Our paper Situational Awareness and Machine Learning – Robots, Manners and Stress was invited as a keynote for two co-located events: IEEE CogSIMA and the NATO STO SCI-341 Research Symposium on Situation awareness of Swarms and Autonomous systems. We got so many conflicting demands from the IEEE that we gave up on making a video of the talk for them, and our paper was pulled from their proceedings. However we decided to put the paper online for the benefit of the NATO folks, who were blameless in this matter.

COVID-19 test provider websites and Cybersecurity: COVID briefing #22

This week’s COVID briefing paper (COVIDbriefing-22.pdf) resumes the Cybercrime Centre’s COVID briefing series, which began in July 2020 with the aim of sharing short on-going updates on the impacts of the pandemic on cybercrime.

The reason for restarting this series is a recent personal experience while navigating through the government’s requirements on COVID-19 testing for international travel. I observed great variation in the quality of website design and cannot help but put on my academic hat to report on what I found.

The quality of some websites is so poor that it is hard to distinguish them from fraudulent sites; that is, they have many of the features and characteristics that consumers have been warned to pay attention to. Compounded with the requirement to provide personally identifiable information, there is a risk that fraudulent sites will indeed spring up, and it will be unsurprising if consumers are fooled.

The government needs to set out minimum standards for the websites of firms that they approve to provide COVID-19 testing — especially with the imminent growth in demand that will come as the UK’s travel rules are eased.

Cybercrime is (still) (often) boring

Depictions of cybercrime often revolve around the figure of the lone ‘hacker’, a skilled artisan who builds their own tools and has a deep mastery of technical systems. However, much of the work involved is now in fact more akin to a deviant customer service or maintenance job. This means that exit from cybercrime communities is less often via the justice system, and far more likely to be a simple case of burnout.

Data ordering attacks

Most deep neural networks are trained by stochastic gradient descent. Now “stochastic” is a fancy Greek word for “random”; it means that the training data are fed into the model in random order.

So what happens if the bad guys can cause the order to be not random? You guessed it – all bets are off. Suppose for example a company or a country wanted to have a credit-scoring system that’s secretly sexist, but still be able to pretend that its training was actually fair. Well, they could assemble a set of financial data that was representative of the whole population, but start the model’s training on ten rich men and ten poor women drawn from that set – then let initialisation bias do the rest of the work.

Does this generalise? Indeed it does. Previously, people had assumed that in order to poison a model or introduce backdoors, you needed to add adversarial samples to the training data. Our latest paper shows that’s not necessary at all. If an adversary can manipulate the order in which batches of training data are presented to the model, they can undermine both its integrity (by poisoning it) and its availability (by causing training to be less effective, or take longer). This is quite general across models that use stochastic gradient descent.
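A toy version of the credit-scoring example shows the effect. This is a sketch under stated assumptions, not the attack from our paper: the four-group dataset, the logistic model, the single training pass and the decaying learning rate are all choices made for illustration. The data are balanced, so gender carries no information about the label; only the order of presentation differs between the two runs.

```python
import numpy as np

def sgd_logistic(X, y, order, lr0=0.5, decay=0.1):
    """One pass of per-sample SGD on logistic loss with a decaying learning rate."""
    w = np.zeros(X.shape[1])
    for step, i in enumerate(order):
        lr = lr0 / (1.0 + decay * step)
        p = 1.0 / (1.0 + np.exp(-X[i] @ w))
        w -= lr * (p - y[i]) * X[i]
    return w

# Columns: income (+1 rich, -1 poor), gender (+1 man, -1 woman).
# Approval depends only on income, and every group has ten members.
features = np.array([[+1, +1], [-1, -1], [-1, +1], [+1, -1]], dtype=float)
labels = np.array([1, 0, 0, 1], dtype=float)
X = np.repeat(features, 10, axis=0)
y = np.repeat(labels, 10)

# Adversarial order: rich men and poor women first, everyone else afterwards.
attack_order = np.arange(len(X))
# Honest order: a random shuffle of the very same samples.
shuffled_order = np.random.default_rng(0).permutation(len(X))

w_attack = sgd_logistic(X, y, attack_order)
w_shuffled = sgd_logistic(X, y, shuffled_order)
print("gender weight, adversarial order:", w_attack[1])
print("gender weight, shuffled order:   ", w_shuffled[1])
```

Because the early steps use the largest learning rates, the samples in which gender happens to predict the outcome leave a mark that the later samples do not fully undo, so the model ends up with a positive weight on gender even though no sample was added or altered.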

This work helps remind us that computer systems with DNN components are still computer systems, and vulnerable to a wide range of well-known attacks. A lesson that cryptographers have learned repeatedly in the past is that if you rely on random numbers, they had better actually be random (remember preplay attacks) and you’d better not let an adversary anywhere near the pipeline that generates them (remember injection attacks). It’s time for the machine-learning community to carefully examine their assumptions about randomness.

Three Paper Thursday: Subverting Neural Networks via Adversarial Reprogramming

This is a guest post by Alex Shepherd.

Five years after Szegedy et al. demonstrated the capacity for neural networks to be fooled by crafted inputs containing adversarial perturbations, Elsayed et al. introduced adversarial reprogramming as a novel attack class for adversarial machine learning. Their findings demonstrated the capacity for neural networks to be reprogrammed to perform tasks outside of their original scope via crafted adversarial inputs, creating a new field of inquiry for the fields of AI and cybersecurity.

Their discovery raised important questions regarding the topic of trustworthy AI, such as what the unintended limits of functionality are in machine learning models and whether the complexity of their architectures can be advantageous to an attacker. For this Three Paper Thursday, we explore the three most eminent papers concerning this emerging threat in the field of adversarial machine learning.

Adversarial Reprogramming of Neural Networks, Gamaleldin F. Elsayed, Ian Goodfellow, and Jascha Sohl-Dickstein, International Conference on Learning Representations, 2018.

In their seminal paper, Elsayed et al. demonstrated their proof-of-concept for adversarial reprogramming by successfully repurposing six pre-trained ImageNet classifiers to perform three alternate tasks via crafted inputs containing adversarial programs. Their threat model considered an attacker with white-box access to the target models, whose objective was to subvert the models by repurposing them to perform tasks they were not originally intended to do. For the purposes of their hypothesis testing, adversarial tasks included counting squares and classifying MNIST digits and CIFAR-10 images.
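The shape of such a crafted input can be sketched as follows, following the general recipe of a small task image embedded in a larger frame whose border carries a learned program. The 224-pixel host size, the function names and the identity label mapping are assumptions for illustration, and the optimisation that learns the program weights `W` against the target classifier is not shown.

```python
import numpy as np

def adversarial_input(small_image, W, host_size=224):
    """Embed a small image in the centre of a host-sized frame and add the program."""
    h, w, c = small_image.shape
    top, left = (host_size - h) // 2, (host_size - w) // 2
    padded = np.zeros((host_size, host_size, c))
    padded[top:top + h, left:left + w] = small_image
    mask = np.ones_like(padded)
    mask[top:top + h, left:left + w] = 0.0  # leave the embedded image untouched
    program = np.tanh(W * mask)             # bounded perturbation around the image
    return padded + program

def map_label(host_class):
    """Hypothetical mapping: read the first ten host classes as the digits 0 to 9."""
    return host_class if host_class < 10 else None

rng = np.random.default_rng(0)
digit = rng.uniform(-1, 1, size=(28, 28, 3))  # stand-in for an MNIST digit
W = rng.standard_normal((224, 224, 3))        # would be learned by the attacker
x_adv = adversarial_input(digit, W)
print(x_adv.shape)
```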


Friendly neighbourhood cybercrime: online harm in the pandemic and the futures of cybercrime policing

As cybercrime researchers we’re often focused on the globalised aspects of online harms – how the Internet connects people and services around the world, opening up opportunities for crime, risk, and harm on a global scale. However, in open access research published this week in the Journal of Criminal Psychology, a collaboration between the Cambridge Cybercrime Centre (CCC), Edinburgh Napier University, the University of Edinburgh, and Abertay University, we argue that the enormous rise in reported cybercrime during the pandemic has paradoxically been dominated by issues with a much more local character. Our paper sketches a past, of cybercrime in a turbulent 2020, and a future, of the roles which state law enforcement might play in tackling online harm in a post-pandemic world.

An exploration of the cybercrime ecosystem around Shodan

By Maria Bada & Ildiko Pete

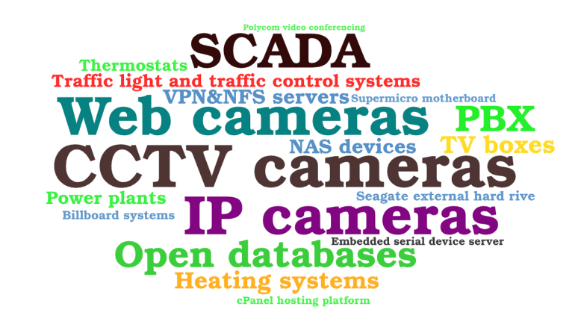

Internet of Things (IoT) solutions, which have permeated our everyday life, present a wide attack surface. They are present in our homes in the form of smart home solutions, and in industrial use cases where they provide automation. The potentially profound effects of IoT attacks have attracted much research attention. We decided to analyse the IoT landscape from a novel perspective, that of the hacking community.

Our recent paper published at the 7th IEEE International Conference on Internet of Things: Systems, Management and Security (IOTSMS 2020) presents an analysis of underground forum discussions around Shodan, one of the most popular search engines for Internet-facing devices and services. In particular, we explored the role Shodan plays in the cybercriminal ecosystem of IoT hacking and exploitation, the main motivations for using Shodan, and popular targets of exploits in scenarios where Shodan is used.

To answer these questions, we followed a qualitative approach and performed a thematic analysis of threads and posts extracted from 19 underground forums presenting discussions from 2009 to 2020. The data were extracted from the CrimeBB dataset, collected and made available to researchers through a legal agreement by the Cambridge Cybercrime Centre (CCC). Specifically, the majority of posts we analysed stem from Hackforums (HF), one of the largest general purpose hacking forums covering a wide range of topics, including IoT. HF is also notable for being the platform where the source code of the Mirai malware was released in 2016 (Chen and Luo, 2017).

The analysis revealed that Shodan provides easier access to targets and simplifies IoT hacking. This is demonstrated for example by discussions that centre around selling and buying Shodan exports, search results that can be readily used to target vulnerable devices and services. Forum members also expressed this view directly:

‘… Shodan and other tools, such as exploit-db make hacking almost like a recipe that you can follow.’

From the perspective of hackers, a significant factor determining the utility of Shodan is whether the targets it returns can indeed be used: for example, whether all hosts in the scan results are active and whether they can be exploited. Thus, the value of Shodan as a hacking tool is determined by its intended use cases.

The discussions were rife with tutorials on various aspects of hacking, which provided a glimpse into the methodology of hacking in general, hacking IoT devices, and the role Shodan plays in IoT attacks. The discussions show that Shodan and similar tools, such as Censys and Zoomeye, play a key role in passive information gathering and reconnaissance. The majority of users agree that Shodan provides value and is a useful tool and do suggest its use. They mention Shodan both in the context of searching for targets and exploiting devices or services with known vulnerabilities. As to the targets of information gathering and exploitation, we found multiple devices and services, including web cameras, industrial control systems and open databases, to mention a few.

Shodan is a versatile tool and plays a prominent role in various use cases. Since IoT devices can potentially expose personally identifiable information, such as health records, user names and passwords, members of underground forums actively discuss utilising Shodan for gathering such data. In particular, this can be achieved by exploiting open databases.

Members of forums discuss accessing remote devices for various reasons. In some cases, it is for fun, while more maliciously inclined actors can use such exploits to collect images and videos and use them, for example, for extortion. Previous research has shown that camera systems represent easy targets for hackers. Accordingly, our findings highlight that these systems are one of the most popular targets, and they are widely discussed in the context of watching the video stream or listening to the audio stream of a compromised camera, or exposing someone through their camera recording. Users frequently discuss IP camera trolling, and we found posts sharing leaked video footage and websites that list hacked cameras.

Shodan, and in particular the Shodan API, can be used to automate scanning for devices which could be used to create a botnet:

‘…you don’t need fancy exploits to get bots just look for bad configurations on shodan.’

And finally, a major use case that members discuss is utilising Shodan in Distributed Reflection Denial of Service attacks, specifically in the first step, where Shodan can be used to gather a list of reflectors, for example NTP servers.

Discussions around selling or buying Shodan accounts show that forum members trade these accounts and associated assets due to Shodan’s credit model, which limits its use. To effectively utilise the output of Shodan queries, premium accounts are required as they provide the necessary scan, query and export credits.

Although Shodan and similar search engines attract malicious actors, they are widely used by security professionals and for penetration testing to unveil IoT security issues. Raising awareness of vulnerabilities provides invaluable help in alleviating these issues. Shodan provides a variety of services, including Malware Hunter, which is a specialised Shodan crawler aimed at discovering malware command-and-control (CC) servers. The service is of great value to security professionals and in the fight against malware, reducing its impact and its ability to compromise targeted victims. This study contributes to IoT security research by highlighting the need for action towards securing the IoT ecosystem based on forum members’ discussions on underground forums. The findings suggest that more focus needs to be placed upon security considerations while developing IoT devices, as a measure to prevent their malicious use.

Reference

F. Chen and Y. Luo, Industrial IoT Technologies and Applications: Second EAI International Conference, Industrial IoT 2017, Wuhu, China, March 25–26, 2017, Proceedings, ser. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering. Springer International Publishing, 2017.

Pushing the limits: acoustic side channels

How far can we go with acoustic snooping on data?

Seven years ago we showed that you could use a phone camera to measure the phone’s motion while typing and use that to recover PINs. Four years ago we showed that you could use interrupt timing to recover text entered using gesture typing. Last year we showed how a gaming app can steal your banking PIN by listening to the vibration of the screen as your finger taps it. In that attack we used the on-phone microphones, as they are conveniently located next to the screen and can hear the reverberations of the screen glass.

This year we wondered whether voice assistants can hear the same taps on a nearby phone as the on-phone microphones could. We knew that voice assistants could do acoustic snooping on nearby physical keyboards, but everyone had assumed that virtual keyboards were so quiet as to be invulnerable.

Almos Zarandy, Ilia Shumailov and I discovered that attacks are indeed possible. In Hey Alexa what did I just type? we show that when sitting up to half a meter away, a voice assistant can still hear the taps you make on your phone, even in the presence of noise. Modern voice assistants have two to seven microphones, so they can do directional localisation, just as human ears do, but with greater sensitivity. We assess the risk and show that a lot more work is needed to understand the privacy implications of the always-on microphones that are increasingly infesting our work spaces and our homes.
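To give a feel for how a pair of microphones yields direction, here is a minimal time-difference-of-arrival sketch. It is a textbook technique shown on a synthetic, noise-free signal, not the pipeline from the paper; the 48 kHz sample rate, the 7 cm microphone spacing and the function names are assumptions.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # metres per second, roughly, at room temperature

def estimate_delay(sig_a, sig_b, sample_rate):
    """Delay of sig_a relative to sig_b in seconds (positive if sig_a lags)."""
    corr = np.correlate(sig_a, sig_b, mode="full")
    lag = int(np.argmax(corr)) - (len(sig_b) - 1)
    return lag / sample_rate

def bearing_deg(delay_s, mic_spacing_m):
    """Far-field angle of arrival, measured from broadside of the microphone pair."""
    ratio = np.clip(delay_s * SPEED_OF_SOUND / mic_spacing_m, -1.0, 1.0)
    return float(np.degrees(np.arcsin(ratio)))

# Synthetic "tap": a short decaying burst that reaches microphone A five samples late.
sample_rate = 48_000
rng = np.random.default_rng(0)
tap = rng.standard_normal(64) * np.exp(-np.arange(64) / 16.0)
mic_b = np.zeros(1024)
mic_b[200:264] = tap
mic_a = np.roll(mic_b, 5)

delay = estimate_delay(mic_a, mic_b, sample_rate)
print(delay * sample_rate, bearing_deg(delay, mic_spacing_m=0.07))
```

Real recordings would need filtering and a more robust correlation method to cope with noise and reverberation.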

Three Paper Thursday: Vēnī, Vīdī, Vote-y – Election Security

With the recent quadrennial instantiation of the US presidential election, discussions of election security have predictably resurged across much of the world. Indeed, news cycles in the US, UK, and EU abound with talking points surrounding the security of elections. In light of this context, we will use this week’s Three Paper Thursday to shed light on the technical challenges, solutions, and opportunities in designing secure election systems.

This post will focus on the technical security of election systems. That said, the topic of voter manipulation techniques such as disinformation campaigns, although out of scope here, is also an open area of research.

At first glance, voting may not seem like a challenging problem. If we are to consider a simple majority vote, surely a group of young schoolchildren could reach a consensus in minutes via hand-raising. Striving for more efficient vote tallying, though, perhaps we may opt to follow the IETF in consensus through humming. As we seek a solution that can scale to large numbers of voters, practical limitations will force us to select a multi-location, asynchronous process. Whether we choose in-person polling stations or mail-in voting, challenges quickly develop: how do we know a particular vote was counted, its contents kept secret, and the final tally correct?

National Academies of Sciences, Engineering, and Medicine (U.S.), Ed., Securing the vote: protecting American democracy, The National Academies Press (2018)

The first paper is particularly prominent due to its unified, no-nonsense, and thorough analysis. The report is specific to the United States, but its key themes apply generally. Written in response to accusations of international interference in the US 2016 presidential election, the National Academies provide 41 recommendations to strengthen the US election system.

These recommendations are extremely straightforward, and as such a reminder that adversaries most often penetrate large systems by targeting the “weakest link.” Among other things, the authors recommend creating standardized ballot data formats, regularly validating voter registration lists, evaluating the accessibility of ballot formats, ensuring access to absentee ballots, conducting appropriate audits, and providing adequate funding for elections.

It’s important to get the basics right. While there are many complex, stimulating proposals that utilize cutting-edge algorithms, cryptography, and distributed systems techniques to strengthen elections, many of these proposals are moot if the basic logistics are mishandled.

Some of these low-tech recommendations are, to the surprise of many passionate technologists, quite common among election security specialists. For example, the recommendations to require a paper ballot trail and to avoid internet voting based on current technology also appear in our next paper.

Matthew Bernhard et al., Public Evidence from Secret Ballots, arXiv:1707.08619 (2017)

Governance aside, the second paper offers a comprehensive survey of the key technical challenges in election security and common tools used to solve them. The paper motivates the difficulty of election systems by attesting that all actors involved in an election are mutually distrustful, meaningful election results require evidence, and voters require ballot secrecy.

Ballot secrecy is more than a nicety; it is key to a properly functioning election system. Implemented correctly, ballot secrecy prevents voter coercion. If a voter’s ballot is not secret, or indeed if there is any way a voter can prove after the fact that they cast a certain vote, malicious actors may pressure the voter to provide proof that they voted as directed. This can be insidiously difficult to prevent if not considered thoroughly.

Bernhard et al. discuss risk-limiting audits (RLAs) as an efficient yet powerful way to limit uncertainty in election results. By sampling and recounting a subset of votes, RLAs enable the use of statistical methods to increase confidence in a correct ballot count. Employed properly, RLAs can enable the high-probability validation of election tallies with effort inversely proportional to the expected margin. RLAs are now being used in real-world elections, and many RLA techniques exist in practice.
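The link between margin and effort can be seen in a simplified ballot-polling audit in the style of BRAVO, a sequential test that stops once the sampled ballots give strong enough evidence for the reported winner. This sketch assumes a two-candidate contest, ballots drawn at random with replacement, and a 5% risk limit; the function name and ballot encoding are made up for the example.

```python
def ballot_polling_audit(sampled_ballots, reported_winner_share, risk_limit=0.05):
    """Return how many ballots were examined before the reported outcome was
    confirmed, or None if the sample ran out first (escalate to a full count)."""
    if not 0.5 < reported_winner_share < 1.0:
        raise ValueError("reported winner share must be between 0.5 and 1")
    ratio = 1.0
    for n, ballot in enumerate(sampled_ballots, start=1):
        if ballot == "winner":
            ratio *= reported_winner_share / 0.5
        elif ballot == "loser":
            ratio *= (1.0 - reported_winner_share) / 0.5
        if ratio >= 1.0 / risk_limit:
            return n
    return None

# Best case for the auditor: every sampled ballot supports the reported winner.
wide_margin = ballot_polling_audit(["winner"] * 100, reported_winner_share=0.60)
narrow_margin = ballot_polling_audit(["winner"] * 100, reported_winner_share=0.55)
print(wide_margin, narrow_margin)
```

Even in this best case the narrower reported margin needs about twice as many ballots, and if the sample keeps contradicting the reported result the audit never confirms it.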

Refreshingly, this paper establishes that blockchain-based voting is a bad idea. Blockchains inherently lack a central authority, so enforcing election rules would be a challenge. Furthermore, a computationally powerful adversary could control which votes get counted.

The paper also discusses high-level cryptographic tools that can be useful in elections. This leads us to our third and final paper.

Josh Benaloh, ElectionGuard Specification v0.95, Microsoft GitHub (2020)

Our final paper is slightly different from the others in this series; it’s a snapshot of a formal specification that is actively being developed, largely based on the author’s 1996 Yale doctoral thesis.

The specification describes ElectionGuard, a system being built by Microsoft to enable verifiable election results (disclaimer: the author of this post holds a Microsoft affiliation). It uses a combination of exponential ElGamal additively-homomorphic encryption, zero knowledge proofs, and Shamir’s secret sharing to conduct publicly-verifiable, secret-ballot elections.
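The additive homomorphism of exponential ElGamal can be illustrated with toy numbers. This is a sketch of the general technique only: the tiny group (p = 467, q = 233, g = 4) and the function names are assumptions chosen so the example runs instantly, whereas the real system uses very large parameters and adds zero-knowledge proofs that each ciphertext encrypts a valid vote.

```python
import secrets

# Toy group: P = 2Q + 1 with P and Q prime, and G generating the subgroup of order Q.
P, Q, G = 467, 233, 4

def keygen():
    """Return (secret key x, public key h = G^x mod P)."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)

def encrypt(h, vote):
    """Encrypt a small integer 'in the exponent': (G^r, G^vote * h^r)."""
    r = secrets.randbelow(Q - 1) + 1
    return pow(G, r, P), (pow(G, vote, P) * pow(h, r, P)) % P

def add(c, d):
    """Multiplying ciphertexts componentwise adds the underlying votes."""
    return (c[0] * d[0]) % P, (c[1] * d[1]) % P

def decrypt_tally(x, c, max_tally):
    """Strip the mask, then search for the small exponent that matches."""
    g_m = (c[1] * pow(c[0], P - 1 - x, P)) % P
    for m in range(max_tally + 1):
        if pow(G, m, P) == g_m:
            return m
    raise ValueError("tally outside expected range")

x, h = keygen()
votes = [1, 0, 1, 1, 0]
total = encrypt(h, 0)
for v in votes:
    total = add(total, encrypt(h, v))
tally = decrypt_tally(x, total, max_tally=len(votes))
print(tally)
```

Only the aggregate is ever decrypted; the individual encrypted ballots stay sealed.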

When a voter casts a ballot, they are given a tracking code which can be used to verify the counting of the ballot’s votes via cryptographic proofs published with the final tally. Voters can achieve high confidence that their ballot represents a proper encryption of their desired votes by optionally spoiling an unlimited number of ballots – triggering a decryption of the spoiled ballot – at the time of voting. Encrypted ballots are homomorphically tallied in encrypted form by the election authorities, and the number of authorities that participate in tallying must meet the threshold set for the election to protect against malicious authorities.
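The threshold idea rests on Shamir's secret sharing, which can be sketched as follows. The field prime, the function names and the 3-of-5 split are assumptions for illustration, and the sketch shares a plain integer; how the specification distributes key material among election authorities is not shown here.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime, large enough for this toy secret

def split(secret, threshold, n_shares):
    """Hide the secret as the constant term of a random polynomial of degree threshold-1."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def poly(x):
        acc = 0
        for c in reversed(coeffs):  # Horner evaluation mod PRIME
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, poly(x)) for x in range(1, n_shares + 1)]

def recover(shares):
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, PRIME - 2, PRIME)) % PRIME
    return secret

shares = split(123456789, threshold=3, n_shares=5)
print(recover(shares[:3]) == 123456789)   # any three shares suffice
print(recover(shares[:2]) == 123456789)   # two shares reveal nothing useful
```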

The specification does not require that the system be used for exclusively internet-based or polling station-based elections; rather it is a framework for users to consume as they wish. Indeed, one of the draws to ElectionGuard is that it does not mandate a specific UI, ballot marking device, or even API. This flexibility allows election authorities to leverage the system in the manner that best fits their jurisdiction. The open source implementation can be found on GitHub.

There are many pieces of voting software available, but ElectionGuard is the new kid on the block that addresses many of the concerns raised in our earlier papers.

Key Themes

Designing secure election systems is difficult.

Often, election systems fall short on the basics; improper voting lists, postage issues, and poorly formatted ballots can disrupt elections as much as some adversaries. Ensuring that the foundational components of an election are handled well currently involves seemingly mundane – but important – things such as paper ballot trails, chains of custody, and voter ID verification.

High-tech election proposals are not new; indeed key insights into the use of cryptographic techniques in elections were being discussed in the academic literature well over two decades ago. That said, in recent years there has been an ostensibly increased investment in implementing cryptographic election systems, and although there remain many problems to be solved the future in this area looks promising.